Turkey trotted out its military drones last week at Istanbul’s Teknofest, one of the world’s largest technology festivals. The country’s drone force made controversial headlines when a United Nations report released in March found that a Turkish Kargu-2 drone had hunted down and killed an enemy combatant in March 2020 without any specific instructions.

The machine was “operating in a ‘highly effective’ autonomous mode,” The New York Post reported. This allowed it to strike without direct oversight. The machine followed its programming without any express input from a human monitor.

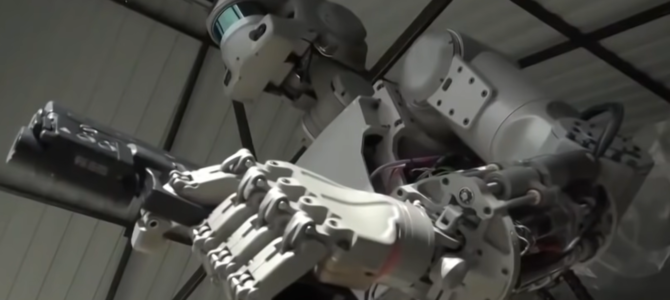

Meanwhile, researchers are developing robots that can accurately mimic a human being’s movements. YouTube videos of the bipedal Atlas robots from Boston Dynamics – a robotics company that began as a spin-off from the Massachusetts Institute of Technology – show the robots performing parkour-like maneuvers and picking up boxes, with the scientists occasionally shoving them over and pulling the box away. Atlas will then go back to pick up the box with single-minded determination.

Any concerns about Atlas going rogue may be theoretical, but the Turkish drone story spotlights a real-life hazard. A machine – no matter how sophisticated – that is programmed to destroy will hold fast to its mission. It has no understanding of or concern for morality; life means nothing to it.

All that matters to a robot is following the course its developers programmed so that it fulfills its function. The drone strike launched by the Biden administration that killed 10 Afghan civilians, including seven children, serves as the most recent example of a drone’s inability to recognize a mission gone wrong.

Why are researchers and their benefactors, many of whom are in government positions, so eagerly going down this path? Do they not see the risks, the inherent danger? Have they not considered the consequences – for themselves as well as others?

In the 2011 film “Captain America: The First Avenger,” we see Dr. Erskine, after revealing the fate of his first test subject, ask Steve Rogers to make a particular pledge. “Whatever happens tomorrow you must promise me one thing,” he says, “that you will stay who you are. Not a perfect soldier, but a good man.”

Too many people in government desire the perfect soldier. They seek control and believe the perfect soldier, whether he is made of flesh and blood or metal, will allow them to achieve it. He will never question them, never bother about morality, human rights, or the worth of another human being. We’ve seen the consequences of this groupthink among top military brass in the botching of the Afghanistan withdrawal, where seemingly no one in command questioned decisions like shutting down Bagram Air Base until it was too late.

History, particularly over the last century, shows us the perfect human soldier. The perfect soldier does what he is told, when he is told, and follows his orders to the letter. He never questions orders or the authority of those above him in the chain of command, regardless of whether the task or the individual mission presented to him is morally right or wrong.

In essence, the perfect soldier is a robot. He will follow orders – up to and including those that lead to genocide – without batting an eye.

A good man, on the other hand, can and often does make a great soldier, in part because he must respect morality. This will naturally lead him to concern himself with his neighbors’ well-being and to strive to always do the right thing, no matter the cost to himself.

Marine Lt. Col. Stuart Scheller, who publicly called upon Gen. Mark Milley and Gen. Frank McKenzie to account for their poor leadership and lack of accountability in the Afghanistan withdrawal, exemplifies the good man. Scheller was relieved of his command and is currently in the brig in “pretrial confinement” for violating a gag order placed upon him. He questioned his commanders’ integrity and patriotism, and it has cost him dearly.

For those who seek the domination of others, a good man is a nightmare. He is competent to weigh the orders of his superiors, as both he and his officers know there are endless variations or permutations between the goal and its achievement.

A famous example is that of Marcus Luttrell and his fellow SEALs, who decided to spare Afghan villagers who discovered them, even though the villagers were possibly allied with the Taliban. Rather than kill the men to safely accomplish their task, the SEALs let them go peacefully, and later suffered an ambush that only Luttrell survived.

If a good man finds that his orders or mission violate the rights of innocents, he will absolutely refuse to carry them out. He may even turn against his “superiors” when they choose to murder others to secure their own power.

Whether they are man or machine, perfect soldiers are predictable, unquestioningly obedient, and silent unless spoken to first. Good men, on the other hand, are entirely irregular. They may smile at something they are not supposed to, query their “superiors” about things those men would rather not admit to, and even break with their orders if they decide it is right to do so.

As technology advances in this century, it behooves us to ask: Do we want perfect soldiers or good men standing in our defense? Do we want a world full of autonomous drones? Or do we want a world that can produce at least one Captain America? Man’s present, and by extension his future, hinges on the answer.