Facebook users started receiving notifications Thursday in a new campaign to “provide resources and support to people on Facebook who may have engaged with or were exposed to extremist content or may know someone who is at risk.”

The Big Tech giant is sending out two notifications to certain users. One asks, “Are you concerned that someone you know is becoming an extremist?” The message then suggests that “you can help” by joining its support group: “Hear stories and get help from people who have escaped violent extremist groups.”

People all over Facebook have been getting these https://t.co/0X4MvgCvnU

— Jack Poso 🇺🇸 (@JackPosobiec) July 1, 2021

The other notification reads, “You may have been exposed to harmful extremist content recently.” The message continues, “Violent groups try to manipulate your anger and disappointment. You can take action now to protect yourself and others.” Finally, Facebook prompts users to “Get support from experts.”

The new initiative is being carried out through a partnership between Facebook and “Life After Hate,” an organization that, Facebook says, “provides support to anyone who wants to leave hate behind and solve problems in nonviolent ways.”

This, however, is not how “Life After Hate” describes itself on its own website, which reads: “‘Life After Hate’ is committed to helping people leave the violent far-right to connect with humanity and lead compassionate lives.” The group asserts that “Today, far-right extremism and white supremacy are the greatest domestic terror threats facing the United States.”

“Life After Hate,” which “partners with [tech companies] to identify and defuse potentially violent extremists online,” received a $400,000 federal grant under the Obama administration, but the grant was rescinded under the Trump administration. Additionally, according to the organization’s website, race hustler and former football quarterback Colin Kaepernick donated $50,000 to it in 2017.

Under “You may have been exposed to harmful extremist content recently,” Facebook prompts users to “get support from experts,” which leads to a section asking, “What arguments do violent groups use to gain followers?” Examples of arguments from violent groups include “violence is the only way to achieve change” and “minorities are destroying the country.” Under each example are bullet points in which Facebook rebuts the argument.

When asked by The Federalist, a Facebook spokesperson refused to answer how it defines extremism or what it defines as “far-right.”

Another big question: if Facebook is trying to curb “extremism,” why is it partnering with a group that focuses solely on “far-right violence”? The Federalist asked Facebook whether it is also surveilling and combating left-wing extremism. It would not say.

More explicitly, The Federalist asked whether or not Facebook would be censoring Antifa content and notifying those who have been exposed to it, given the leftist anarchist group has for years been so violent that former Homeland Security Secretary Chad Wolf said they meet the standards of a “domestic terrorist group.” Facebook would not say.

“Black Lives Matter” is another major violent left-wing organization that is linked to up to 95 percent of 2020 U.S. riots. Facebook would not say whether BLM falls under extremism, if Facebook is censoring BLM-related content, or if Facebook is notifying users who have been exposed to BLM-related content.

A Facebook spokesperson instead told The Federalist that the notifications were part of a test running in the U.S. that “is a pilot for a wider, global approach to radicalization prevention,” and is connected to Facebook’s “Redirect Initiative.” Facebook’s website says its “Redirect Initiative” “helps combat violent extremism and dangerous organizations by redirecting hate and violence-related search terms towards resources, education, and outreach groups that can help.”

The new extremism notifications are also connected to the Christchurch Call to Action, an initiative that “outlines collective, voluntary commitments from Governments and online service providers intended to address the issue of terrorist and violent extremist content online and to prevent the abuse of the internet…”

“We continue to work with expert academic and NGO partners to develop this important work, and to share knowledge and expertise through GIFCT,” continued the spokesperson. GIFCT, the Global Internet Forum to Counter Terrorism, is an organization that, according to its website, develops surveillance technology for “companies committed to preventing terrorist and violent extremists from exploiting their platforms while protecting human rights.”

“We identify users who have been potentially exposed to violating content and other users who have been the subject of prior enforcement,” the Facebook spokesperson said. “We are providing these additional resources to give people exposed to this content more information and help others intervene or talk to friends or family off-platform.”

The new notifications come after tech giants like Facebook have come under fire for political censorship. Indeed, many perceive the Facebook warning messages as a direct attack on free speech and as a further effort to target and suppress conservatives on the platform.

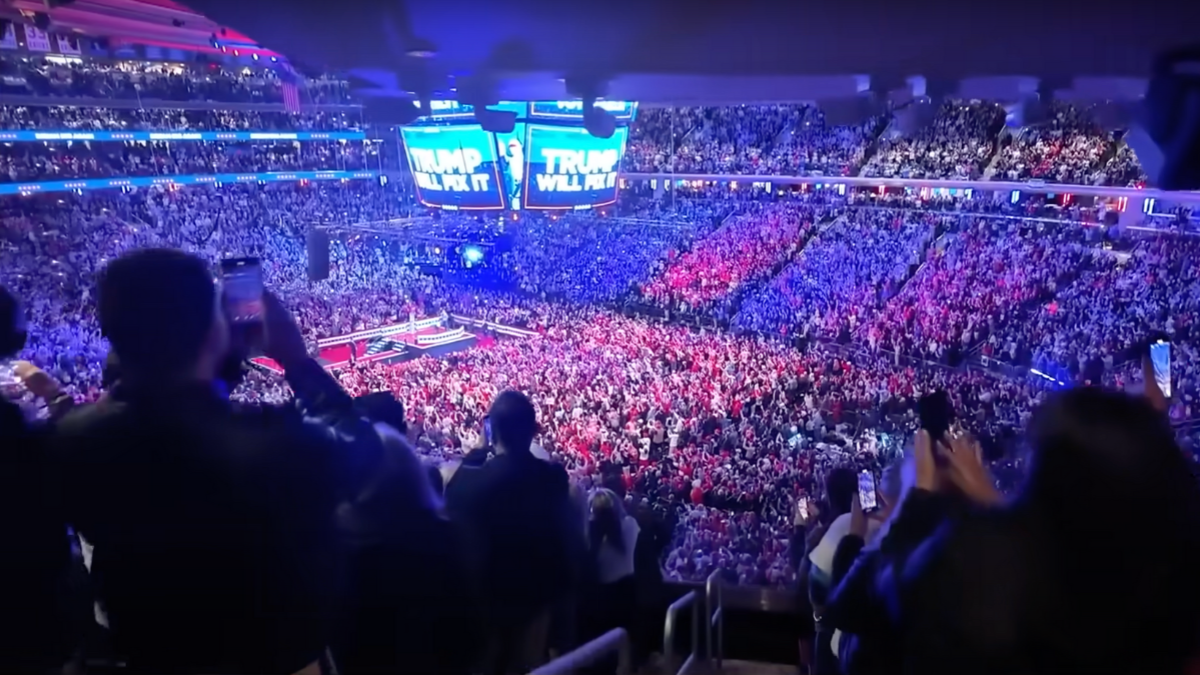

One of the most pointed examples of Facebook’s campaign against conservative thought is its ban on former President Donald Trump, who the company said will remain banned from its platforms, including Instagram, through the 2022 midterms and until January 2023. With no evidence, Nick Clegg, Facebook’s vice president of global affairs, said that the former president is a “risk to public safety.”

If former President Trump is a “risk to public safety,” then does that mean his supporters are too? Facebook wouldn’t say. However, it is obvious from its partnership with “Life After Hate,” and from those who reported receiving either notification, that the people Facebook is identifying as “extremist” are conservatives.

#Facebook I have a long list of leftist extremists that I would love to report to your #FacebookBrownShirts but I doubt that's the extremism you are alluding to…. pic.twitter.com/tIcFkEg39m

— Peter Boykin For North Carolina (@Boykin4Congress) July 1, 2021

Many are arguing that this new development is yet another example of why Section 230 of the federal Communications Decency Act should be repealed. Section 230 serves as a liability shield for online social media companies like Facebook, which increasingly act like publishers rather than platforms.

Yeah, I’m becoming an extremist. An anti-@Facebook extremist. “Confidential help is available?” Who do they think they are?

Either they’re a publisher and a political platform legally liable for every bit of content they host, or they need to STAY OUT OF THE WAY. Zuck’s choice. pic.twitter.com/AImMAcnPAv

— Alex Berenson (@AlexBerenson) July 1, 2021